Simple and seamless file transfer to Amazon S3 and Amazon EFS using SFTP, FTPS, and FTP

The AWS Transfer Family provides fully managed support for file transfers directly into and out of Amazon S3 or Amazon EFS. With support for Secure File Transfer Protocol (SFTP), File Transfer Protocol over SSL (FTPS), and File Transfer Protocol (FTP), the AWS Transfer Family helps you seamlessly migrate your file transfer workflows to AWS by integrating with existing authentication systems, and providing DNS routing with Amazon Route 53 so nothing changes for your customers and partners, or their applications. With your data in Amazon S3 or Amazon EFS, you can use it with AWS services for processing, analytics, machine learning, archiving, as well as home directories and developer tools. Getting started with the AWS Transfer Family is easy; there is no infrastructure to buy and set up.

- Couchdrop moves files to the cloud: a cloud SFTP, FTP, SCP, and Rsync server that works with storage such as Dropbox. It is a fully hosted service that is up and running in seconds.

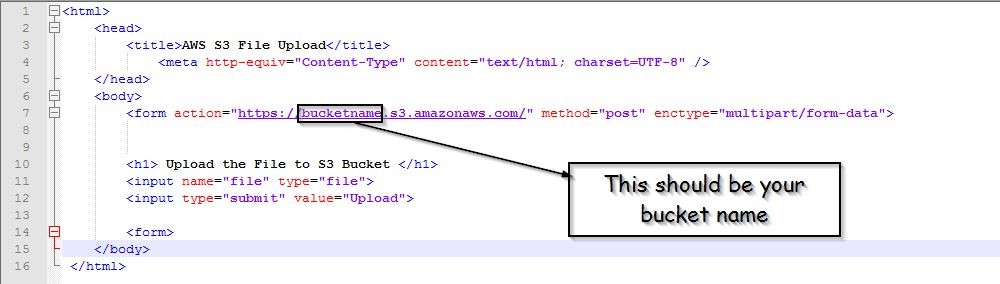

- This Lab explores a fully customizable, open source solution for using FTP to transfer files to an S3 bucket. The solution also covers scenarios in which you want to mount an S3 bucket on your local file system. In this Lab, you will learn to download, compile, and install the S3FS Fuse utility on an Amazon Linux 2-based EC2 instance.

FTP stands for File Transfer Protocol. This protocol governs the transfer of files between clients and servers over a network. With FTP, a client can copy, move, rename, delete, and download files. The catch is that you cannot use FTP without a server, and building and running an FTP server is a complicated process.

Benefits

Easily and seamlessly modernize your file transfer workflows

File exchange over SFTP, FTPS, and FTP is deeply embedded in business processes across many industries, such as financial services, healthcare, telecom, and retail. Organizations in these industries use these protocols to securely transfer files such as stock transactions, medical records, invoices, software artifacts, and employee records. The AWS Transfer Family lets you preserve your existing data exchange processes while taking advantage of the superior economics, data durability, and security of Amazon S3 or Amazon EFS. With just a few clicks in the AWS Transfer Family console, you can select one or more protocols, configure Amazon S3 buckets or Amazon EFS file systems to store the transferred data, and set up end user authentication by importing your existing end user credentials or integrating an identity provider such as Microsoft Active Directory or LDAP. End users can continue to transfer files using their existing clients, while the files are stored in your Amazon S3 bucket or Amazon EFS file system.

No servers to manage

You no longer have to purchase and run your own SFTP, FTPS, or FTP servers and storage to securely exchange data with partners and customers. The AWS Transfer Family manages your file infrastructure for you, which includes auto-scaling capacity and maintaining high availability with a multi-AZ architecture.

Seamless migrations

The AWS Transfer Family is fully compatible with the SFTP, FTPS, and FTP standards and connects directly with your identity provider systems like Active Directory, LDAP, Okta, and others. For you, this means you can migrate file transfer workflows to AWS without changing your existing authentication systems, domain, and hostnames. Your external customers and partners can continue to exchange files with you, without changing their applications, processes, client software configurations, or behavior.

Works natively with AWS services

The service stores the data in Amazon S3 or Amazon EFS, making it easily available for processing and analytics workflows with other AWS services, unlike third-party tools that may keep your files in silos. Native support for AWS management services simplifies your security, monitoring, and auditing operations.

How it works

Get a hands-on understanding of how the AWS Transfer Family can help address your file transfer challenges by watching this quick demo.

Use cases

Sharing and receiving files internally and with third parties

Exchanging files internally within an organization or externally with third parties is a critical part of many business workflows. This file sharing needs to be done securely, whether you are transferring large technical documents for customers, media files for a marketing agency, research data, or invoices from suppliers. To make migration from existing infrastructure seamless, the AWS Transfer Family provides protocol options, integration with existing identity providers, and network access controls, so nothing changes for your end users. The AWS Transfer Family makes it easy to support recurring data sharing processes as well as one-off secure file transfers, whichever suits your business needs.

Data distribution made secure and easy

Providing value-added data is a core part of many big data and analytics organizations. This requires making your data easily accessible while keeping it secure. The AWS Transfer Family offers multiple protocols to access data in Amazon S3 or Amazon EFS, and provides access control mechanisms and flexible folder structures that help you dynamically decide who gets access to what, and how. You also no longer need to worry about scaling your data sharing business as it grows, because the service provides built-in real-time scaling and high availability for secure and timely data transfers.

Ecosystem data lakes

Whether you are part of a life sciences organization or an enterprise running business-critical analytics workloads in AWS, you may need to rely on third parties to send you structured or unstructured data. With the AWS Transfer Family, you can set up your partner teams to transfer data securely into your Amazon S3 bucket or Amazon EFS file system over the protocols you choose. You can then apply the AWS portfolio of analytics and machine learning capabilities to the data to advance your research projects. You can do all this without buying more hardware to run storage and compute on-premises.

Customers

Customers are using AWS Transfer for SFTP, AWS Transfer for FTPS, and AWS Transfer for FTP for a variety of use cases. Visit the customers page to read about their experiences.

AWS Transfer Family is designed to simplify file transfer operations for you.

Instantly get access to the AWS Free Tier, including S3 storage for your files.

Get started building your SFTP, FTPS, and FTP services in the AWS Management Console.

02/26/19

Motivation

As developers, we quite often land in projects where the customer uses SFTP (SSH File Transfer Protocol) to exchange data with their partners. In fact, I can hardly remember a project where SFTP wasn't in the picture. In my last project, for example, the customer was using field data loggers that logged measured values and transferred them to an on-premises SFTP server. What was different that time was that we were building a serverless solution in AWS. As you probably know, when an application runs in the AWS serverless world, it is essential to have your data in S3, so it can easily be used with other AWS services for all kinds of purposes: processing, archiving, analytics, and so on.

What we needed was a mechanism to poll the SFTP server for new files and move them into an S3 bucket. So we built a custom serverless solution from a combination of AWS managed services. It is reasonable to ask why we didn't use AWS Transfer for SFTP. The answer is simple: it didn't exist at that time. Still, I think a custom solution keeps its value for small businesses, where traffic is light and the SFTP server is already part of the existing platform. If this sounds interesting, keep reading to find out more.

From SFTP to AWS S3: What you will read about in this post

- Custom solution for moving files from SFTP to S3

- In-depth description of the architecture

- Solution constraints and limitations

- Full source code

- Infrastructure as Code

- Detailed guide on how to run it in AWS

- Video instructions

The architecture

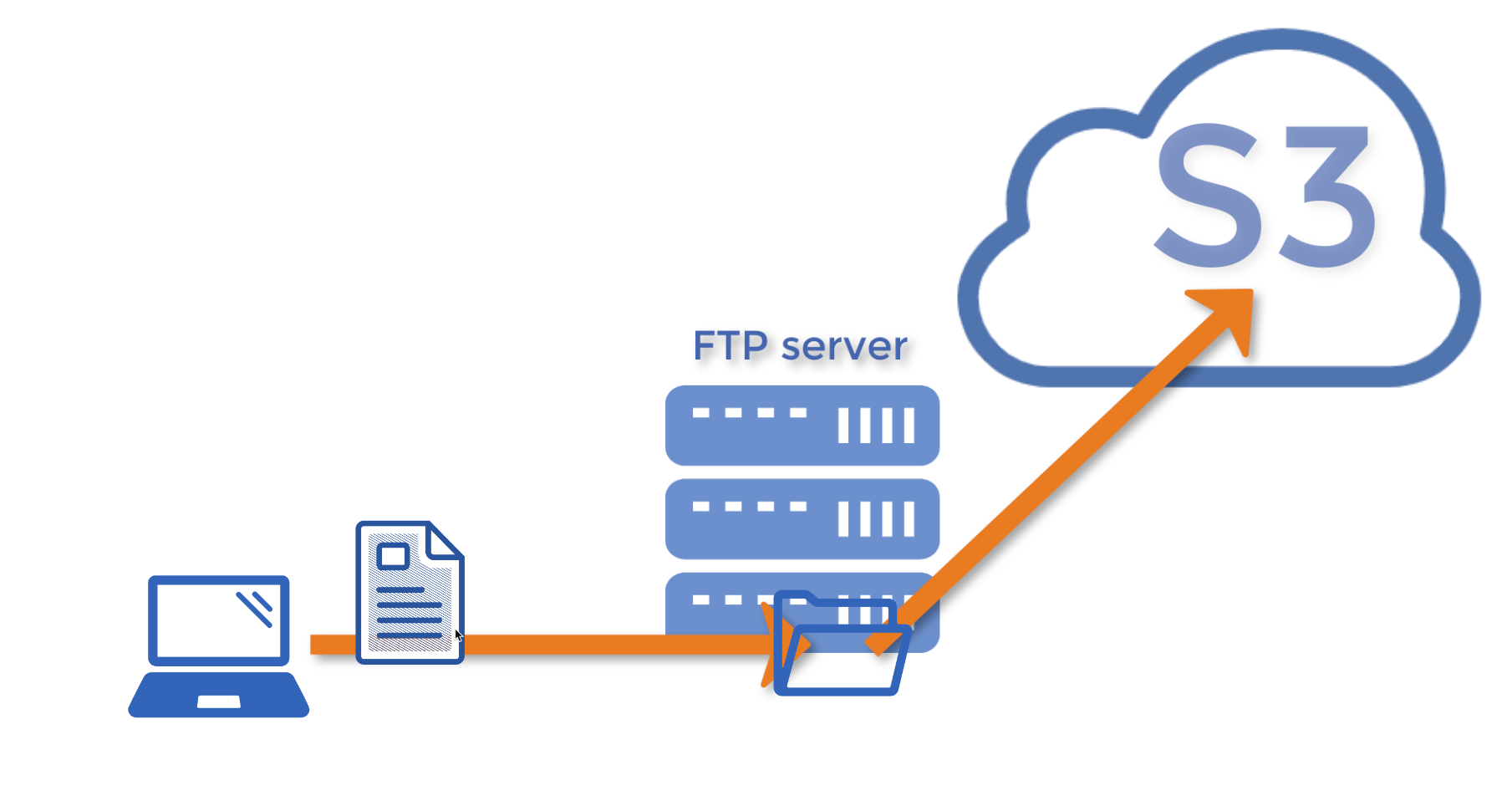

Let's start by briefly explaining what our solution does. It scans an SFTP folder and moves all files from it into an S3 bucket, where "moves" means it both copies and deletes them. It doesn't have to be a single folder/bucket pair: you can configure as many source and destination pairs as you want. Another important question is when it runs. It runs on a schedule defined by a cron expression, which makes it quite flexible.
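Here is a small structural check that makes the cron part concrete. It targets the six-field format that CloudWatch Events schedule expressions use: cron(Minutes Hours Day-of-month Month Day-of-week Year). The function name checkScheduleExpression is hypothetical and is not part of the original code. The sketch checks two things only:

- the number of fields;
- the rule that day-of-month and day-of-week cannot both be set.

It does not validate the values of the individual fields. It also ignores rate(...) expressions, which CloudWatch Events accepts as well.

```javascript
// Hypothetical helper, not part of the original solution.
// Checks the shape of a CloudWatch Events cron expression:
//   cron(Minutes Hours Day-of-month Month Day-of-week Year)
// It does not validate the value or range of each individual field.
function checkScheduleExpression(expression) {
  const match = /^cron\((.+)\)$/.exec(expression.trim());
  if (!match) {
    return { ok: false, reason: "expected cron(...)" };
  }
  const fields = match[1].trim().split(/\s+/);
  if (fields.length !== 6) {
    return { ok: false, reason: `expected 6 fields, got ${fields.length}` };
  }
  const [minutes, hours, dayOfMonth, month, dayOfWeek, year] = fields;
  // Day-of-month and day-of-week cannot both be set; this sketch requires
  // exactly one of them to be "?".
  if ((dayOfMonth === "?") === (dayOfWeek === "?")) {
    return { ok: false, reason: "exactly one of day-of-month and day-of-week must be '?'" };
  }
  return { ok: true, fields: { minutes, hours, dayOfMonth, month, dayOfWeek, year } };
}

// Every 15 minutes, every day.
console.log(checkScheduleExpression("cron(0/15 * * * ? *)").ok); // true
```

A five-field Unix-style expression such as cron(0 12 * * *) is rejected. This is a common mistake when you copy a schedule from crontab.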

The following is a list of AWS services and tech stacks in use:

How it works

A CloudWatch Event is scheduled to trigger the Lambda, and the Lambda is responsible for connecting to the SFTP server and moving files to their S3 destination.

This approach requires only one Lambda to be deployed, because the Lambda is agnostic of both the source (SFTP folder) and the destination (S3 bucket). When a CloudWatch Event triggers the Lambda, it passes the source and destination as parameters. You deploy a single Lambda and as many CloudWatch Events as you need. They all trigger the same Lambda, each with its own source/destination parameters.

Node.js and Lambda: Connect to FTP and download files to AWS S3

The centerpiece is a Node.js Lambda function. It uses the ftp client module to communicate with the FTP server. Every time a CloudWatch Event triggers the Lambda, it executes this method:

It iterates through the contents of the given folder and uploads each file to the S3 bucket. As soon as a file has been uploaded successfully, it removes the file from its original location.
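The loop described above can be sketched as follows. This is not the original code. `ftp` and `s3` are hypothetical adapters passed in by the caller. In the real Lambda they would wrap the FTP client module and the AWS SDK; injecting them keeps the logic runnable without a server.

The key detail is the order of operations. The source file is deleted only after its upload has resolved, so a failed upload leaves the file on the SFTP server for the next run.

```javascript
// Sketch of the move loop, not the original implementation.
// `ftp` must provide list(dir), get(path), delete(path);
// `s3` must provide putObject(bucket, key, body). All methods are async.
async function moveFolder(ftp, s3, ftpPath, bucket) {
  const moved = [];
  const names = await ftp.list(ftpPath);
  for (const name of names) {
    const remotePath = `${ftpPath}/${name}`;
    const body = await ftp.get(remotePath);
    // If the upload throws, the loop stops and nothing is deleted.
    await s3.putObject(bucket, name, body);
    await ftp.delete(remotePath);
    moved.push(name);
  }
  return moved;
}

// In-memory fakes for local experiments; hypothetical, not AWS or FTP APIs.
function createFakeFtp(files) {
  return {
    list: async (dir) =>
      Object.keys(files)
        .filter((p) => p.startsWith(`${dir}/`))
        .map((p) => p.slice(dir.length + 1)),
    get: async (p) => files[p],
    delete: async (p) => {
      delete files[p];
    },
  };
}

function createFakeS3(buckets) {
  return {
    putObject: async (bucket, key, body) => {
      if (!buckets[bucket]) buckets[bucket] = {};
      buckets[bucket][key] = body;
    },
  };
}
```

Because the clients are injected, the same function can be exercised in unit tests with the fakes and wired to the real clients inside the handler.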

Notice event.ftp_path and event.s3_bucket in the code above. They come from the CloudWatch Event Rule definition, which is described in the next section.
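The handler depends entirely on these two properties, so it can be worth validating them up front. A misconfigured rule then fails immediately with a clear message, instead of partway through a transfer.

This is a minimal sketch, assuming the event carries exactly the ftp_path and s3_bucket properties mentioned above. The function name parseImportFilesEvent is hypothetical. The event shape is written as a JSDoc typedef named after the handler's ImportFilesEvent.

```javascript
/**
 * Assumed shape of the event the handler receives from the rule.
 * @typedef {{ ftp_path: string, s3_bucket: string }} ImportFilesEvent
 */

// Hypothetical validation step, not part of the original code.
function parseImportFilesEvent(event) {
  if (event === null || typeof event !== "object") {
    throw new TypeError("event must be an object");
  }
  for (const key of ["ftp_path", "s3_bucket"]) {
    if (typeof event[key] !== "string" || event[key].length === 0) {
      throw new TypeError(`event.${key} must be a non-empty string`);
    }
  }
  /** @type {ImportFilesEvent} */
  const parsed = { ftp_path: event.ftp_path, s3_bucket: event.s3_bucket };
  return parsed;
}
```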

CloudWatch Event Rule

The Lambda is triggered on a schedule by CloudWatch Event Rules. Every Rule consists of a cron expression and an Input Constant. The Input Constant is exactly the mechanism we can use to pass the source and destination.
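The "one Lambda, many rules" idea can be sketched as a function that turns source/destination pairs into rule definitions. Each definition carries its own schedule and an Input Constant, and all of them point at the same Lambda ARN.

The property names mirror the CloudWatch Events PutRule and PutTargets parameters (Name, ScheduleExpression, Targets with Id, Arn, Input). In the real API, rules and targets are created in two separate calls; here they are merged into one object for readability, and no AWS call is made. The function name buildRules, the naming scheme, and the ARN in the test are hypothetical.

```javascript
// Hypothetical helper, not part of the original solution. It builds plain
// objects whose property names mirror the CloudWatch Events PutRule and
// PutTargets parameters; it does not call AWS.
function buildRules(lambdaArn, pairs) {
  return pairs.map((pair, index) => ({
    Name: `import-files-${index + 1}`, // assumed naming scheme
    ScheduleExpression: pair.schedule,
    Targets: [
      {
        Id: `import-files-target-${index + 1}`,
        Arn: lambdaArn, // every rule targets the same Lambda
        // The Input Constant: a JSON string delivered to the Lambda as its event.
        Input: JSON.stringify({ ftp_path: pair.ftpPath, s3_bucket: pair.s3Bucket }),
      },
    ],
  }));
}
```

In Terraform, which the post uses for provisioning, the same idea maps to two resources per pair:

- an aws_cloudwatch_event_rule with a schedule_expression;
- an aws_cloudwatch_event_target whose input argument holds the JSON.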

Now, when you take a look at the signature of the handler, you'll see an ImportFilesEvent:

This is exactly the value of the Input Constant and it is shown in the logged output as:

FTP connection parameters

FTP connection parameters are stored in another AWS service called Parameter Store, which is a convenient place to store configuration and secret data. The value is stored as JSON:

When Lambda executes readFtpConfiguration(), it reads the FTP Configuration from Parameter Store.
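Here is a sketch of what readFtpConfiguration() might do with that value: parse the JSON and check the fields the FTP client needs before it tries to connect. This is not the original function, and several names are assumptions:

- `getParameterValue` is a hypothetical injected function. In the Lambda it would wrap the SSM GetParameter call, with WithDecryption set to true for a SecureString.
- The field names host, port, user, and password are also assumed, since the original JSON is not shown here.

```javascript
// Hypothetical sketch of readFtpConfiguration(), not the original code.
// `getParameterValue(name)` is an injected async function returning a string.
// The field names host, port, user, and password are assumed.
async function readFtpConfiguration(getParameterValue, parameterName) {
  const raw = await getParameterValue(parameterName);
  let config;
  try {
    config = JSON.parse(raw);
  } catch (err) {
    throw new Error(`parameter ${parameterName} is not valid JSON`);
  }
  if (config === null || typeof config !== "object") {
    throw new Error(`parameter ${parameterName} must hold a JSON object`);
  }
  for (const key of ["host", "user", "password"]) {
    if (typeof config[key] !== "string" || config[key] === "") {
      throw new Error(`FTP configuration is missing "${key}"`);
    }
  }
  const port = Number(config.port);
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error("FTP configuration needs a positive integer port");
  }
  return { host: config.host, port, user: config.user, password: config.password };
}
```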

Limitations and constraints

Be aware that this solution neither synchronizes SFTP and S3 nor works in real time. Don't expect a file to appear in S3 as soon as it is uploaded to SFTP. It is moved only when the next scheduled execution runs.

Another consideration is how much data it can handle. AWS Lambda has limits, such as a maximum execution time of 15 minutes. This solution scans an entire folder and transfers all files from it, so Lambda can hit one of these limits if there are too many files or the files are very large. In our case, it worked well with dozens of files, none of them ever larger than a few KB.
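One possible mitigation for the timeout limit is not part of the original solution: stop picking up new files when the invocation is close to its timeout, and leave the rest for the next scheduled run. This relies on the context.getRemainingTimeInMillis() method, which the Lambda Node.js runtime provides on the context object. `moveOne` is a hypothetical per-file function, and the 30-second margin is an arbitrary assumption.

```javascript
// Illustrative addition, not the original code: respect a time budget.
// `context.getRemainingTimeInMillis()` comes from the Lambda Node.js runtime;
// `moveOne(name)` is a hypothetical async function that moves one file.
async function moveWithinTimeBudget(names, moveOne, context, safetyMarginMs = 30000) {
  const moved = [];
  for (const name of names) {
    if (context.getRemainingTimeInMillis() < safetyMarginMs) {
      break; // remaining files stay on the SFTP server until the next run
    }
    await moveOne(name);
    moved.push(name);
  }
  return { moved, skipped: names.slice(moved.length) };
}
```

This keeps each invocation well inside the limit. The trade-off is that a large backlog is drained over several scheduled runs instead of one.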

If there are network issues during a transfer, the Lambda execution will fail. However, because Amazon CloudWatch Events invokes Lambda functions asynchronously, the execution will be retried. By default, Lambda retries a failed asynchronous invocation up to two more times. I encourage you to explore its limits on your own, and let me know in the comments section if you see how to make it more resilient to failures.
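One way to ride out short network glitches is a per-file retry with exponential backoff inside a single invocation, instead of failing the whole run. This is an illustrative addition, not part of the original code. The helper name withRetry and its defaults are hypothetical.

```javascript
// Illustrative addition, not the original code: retry an async operation
// with exponential backoff, then rethrow the last error if all attempts fail.
async function withRetry(operation, { attempts = 3, baseDelayMs = 200 } = {}) {
  let lastError;
  for (let attempt = 0; attempt < attempts; attempt++) {
    try {
      return await operation();
    } catch (err) {
      lastError = err;
      if (attempt < attempts - 1) {
        const delay = baseDelayMs * 2 ** attempt; // 200 ms, 400 ms, ...
        await new Promise((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  throw lastError;
}
```

Retrying is reasonably safe in this design because the source file is removed only after its upload succeeds. A repeated run, whether from this helper or from Lambda's own asynchronous retry, simply uploads the same file to the same key again and overwrites the object.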

Tests are missing. Testing Lambda is another big topic, and I wanted to focus on the architecture instead. However, you can refer to another blog post to find out more about this topic.

Run the code with Terraform

To use Lambda and other AWS services, you need an AWS account. If you don't have an account, see Create and Activate an AWS Account.

You'll also need to install Terraform and Node.js.

When everything is set up, run git clone to get a copy of the repository that contains the full source code.

You will run this code in a moment, but first you need to make two changes. First, open provision/credentials/ftp-configuration.json and enter real SFTP connection parameters. Yes, this means you will need an SFTP server, too. The code will try to download files from folders named source-one and source-two, so make sure you have created them.

Second, open provision/variables.tf and change the value of the default attribute. S3 bucket names must be globally unique, and you will use this parameter to make yours unique.
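Terraform handles this in the repository, but the underlying idea is easy to show. This is a minimal sketch, assuming the bucket name is built as a base name plus a unique suffix. The function bucketName and the example suffix are hypothetical and do not come from the repository.

The sketch checks the basic S3 bucket naming rules:

- the name is 3 to 63 characters long;
- it uses only lowercase letters, digits, dots, and hyphens;
- it begins and ends with a letter or digit.

```javascript
// Hypothetical helper, not part of the original Terraform code. It appends a
// unique suffix to a base name and checks the basic S3 bucket naming rules.
// Other rules (for example, not being formatted like an IP address) are not
// checked here.
function bucketName(base, suffix) {
  const name = `${base}-${suffix}`;
  if (name.length < 3 || name.length > 63) {
    throw new Error(`"${name}" must be 3 to 63 characters long`);
  }
  if (!/^[a-z0-9][a-z0-9.-]*[a-z0-9]$/.test(name)) {
    throw new Error(`"${name}" is not a valid S3 bucket name`);
  }
  return name;
}

console.log(bucketName("destination-one", "acme42")); // destination-one-acme42
```

Uppercase letters are a common reason a suffix is rejected, so a lowercase, alphanumeric suffix is the safest choice.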

Next, build the Node.js Lambda package, which produces the Lambda-Deployment.zip file required by Terraform.

When Lambda-Deployment.zip is ready, start creating the infrastructure.

If you prefer video instructions, have a look here:

Now you should see the success message "Apply complete! Resources: 17 added, 0 changed, 0 destroyed." At this point, all AWS resources should be created, and you can check them out by logging in to the AWS Console. Navigate to the CloudWatch Event Rule section and look at the scheduler timetable to find out when the Lambda will be triggered. In the end, you should see files moved from:

1. source-one FTP folder -> destination-one-id S3 bucket, and

2. source-two FTP folder -> destination-two-id S3 bucket

Summary: Going serverless by moving files from SFTP to AWS S3

This was a presentation of a lightweight and simple solution for moving files from more traditional services to the serverless world. It has its limitations for larger-scale data, but it has proven stable for smaller businesses. I hope it helps you, or serves as an idea, when you encounter a similar task. Thank you for reading.